Video anomaly detection (VAD) is a vital yet complex open-set task in computer vision, commonly tackled through reconstruction-based methods. However, these methods suffer from two key limitations: (1) insufficient robustness in open-set scenarios, where unseen normal motions are frequently misclassified as anomalies, and (2) an overemphasis on local motion reconstruction despite a limited capacity to perform it. To overcome these challenges, we introduce a novel frequency-guided diffusion model with perturbation training. First, we enhance robustness by training a generator to produce perturbed samples that target the weaknesses of the reconstruction model. Second, we employ the 2D Discrete Cosine Transform (DCT) to separate high-frequency (local) and low-frequency (global) motion components. Extensive experiments on five VAD datasets demonstrate state-of-the-art performance.

Figure 1. (a) Training data consists of seen normal motions; testing data contains unseen normal and abnormal motions. (b) Frequency analysis reveals that motion retaining only 70% low-frequency information remains similar to the original in global structure.

Figure 2. Overview of FG-Diff. Training includes: (1) minimizing MSE to train the noise predictor, and (2) maximizing MSE to train the perturbation generator. During testing, high-frequency information of observed motions and low-frequency information of generated motions are fused.
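The two training objectives named in the Figure 2 caption can be read as a min-max game over the same MSE. The expression below is only a sketch under assumed notation, not the paper's own equation: the noise predictor $\epsilon_\theta$, the perturbation generator $g_\phi$, the additive form of the perturbation, and the use of $\lambda_{PI}$ as a scaling factor are all assumptions made for illustration.

```latex
% Assumed notation (hypothetical, for illustration only):
%   x            : an observed normal motion
%   g_\phi       : perturbation generator, trained to maximize the MSE
%   \epsilon_\theta : noise predictor (denoiser), trained to minimize the MSE
%   \lambda_{PI} : perturbation intensity
%   \tilde{x}_t  : the perturbed motion \tilde{x} after t forward diffusion steps with noise \epsilon
\tilde{x} = x + \lambda_{PI}\, g_\phi(x),
\qquad
\min_{\theta}\; \max_{\phi}\;
\mathbb{E}_{x,\, t,\, \epsilon}
\left[ \left\lVert \epsilon - \epsilon_\theta\!\left(\tilde{x}_t,\, t\right) \right\rVert_2^2 \right]
```

Under this reading, the generator searches for perturbations the denoiser handles poorly, and the denoiser is then trained on exactly those samples.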

Figure 3. Training: adversarial training with a perturbation generator and a denoiser. Inference: DCT separates motion into global (low-frequency) and local (high-frequency) components for accurate reconstruction.
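The frequency split and the test-time fusion described in the Figure 2 caption can be sketched with a 2D DCT over a motion array. This is a minimal illustration, not the FG-Diff implementation: the `(frames, features)` layout, the helper names `dct_matrix`, `split_frequencies`, and `fuse`, the rectangular low-frequency mask, and the `keep_ratio=0.7` default (chosen only to echo the 70% figure in the Figure 1 caption) are all assumptions.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix C: C @ x transforms, C.T @ y inverts."""
    k = np.arange(n)[:, None]  # frequency index (rows)
    i = np.arange(n)[None, :]  # sample index (columns)
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)  # the DC row has its own normalization
    return c


def split_frequencies(motion: np.ndarray, keep_ratio: float = 0.7):
    """Split a (frames, features) motion into low- and high-frequency parts.

    Assumption: "low frequency" means the top-left block of the 2D DCT
    coefficient grid; the paper may define the split differently.
    """
    t, d = motion.shape
    ct, cd = dct_matrix(t), dct_matrix(d)
    coeffs = ct @ motion @ cd.T  # 2D DCT: along time, then along features

    mask = np.zeros((t, d), dtype=bool)
    mask[: int(np.ceil(keep_ratio * t)), : int(np.ceil(keep_ratio * d))] = True

    low = ct.T @ (coeffs * mask) @ cd    # inverse DCT of kept coefficients
    high = ct.T @ (coeffs * ~mask) @ cd  # inverse DCT of the remainder
    return low, high


def fuse(observed: np.ndarray, generated: np.ndarray, keep_ratio: float = 0.7):
    """Combine generated low frequencies with observed high frequencies."""
    low_generated, _ = split_frequencies(generated, keep_ratio)
    _, high_observed = split_frequencies(observed, keep_ratio)
    return low_generated + high_observed


# Hypothetical shapes: 24 frames, 17 joints x 2 coordinates.
rng = np.random.default_rng(0)
observed = rng.standard_normal((24, 34))
generated = rng.standard_normal((24, 34))
fused = fuse(observed, generated)
```

Because the DCT matrices are orthonormal, the two parts returned by `split_frequencies` sum back to the input, so fusing a motion with itself returns it unchanged.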

Figure 4. Perturbation training illustration. (a) Green and yellow points denote original and perturbed motions. (b) The reconstruction domain is extended by perturbation training.

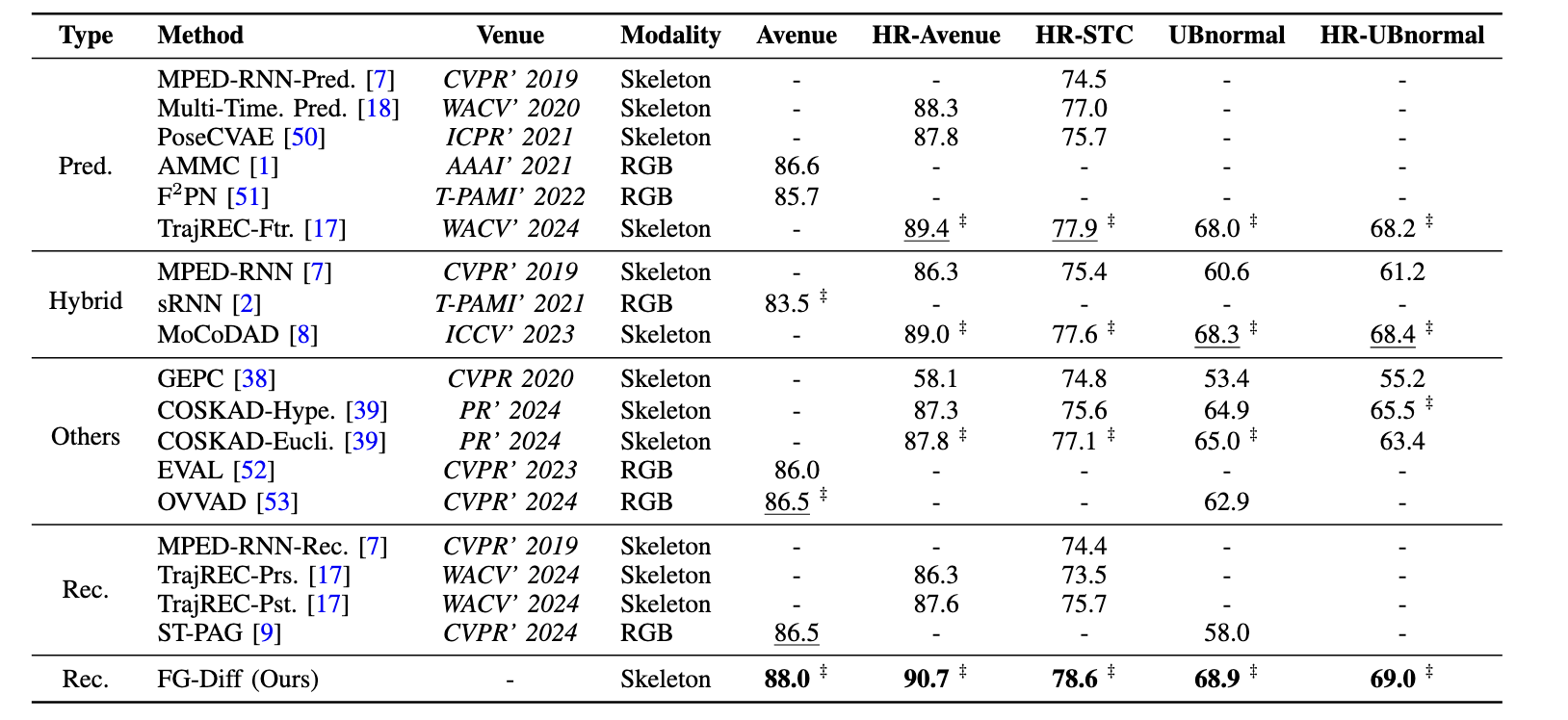

Table 1. Comparison with state-of-the-art methods. Bold: best results; Underlined: second-best; ‡: best under each paradigm.

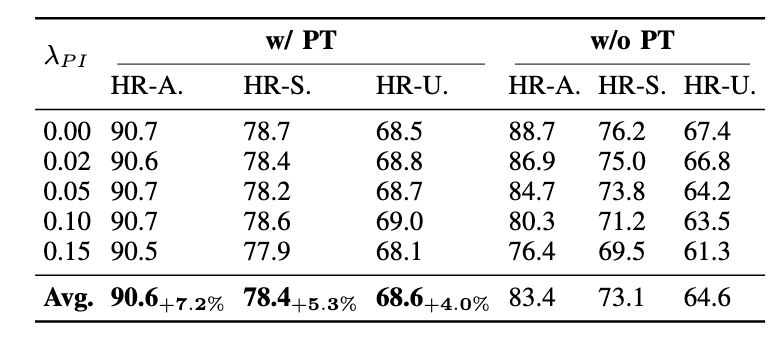

Table 2. Robustness analysis of perturbation training. "PT" denotes perturbation training; "λPI" represents perturbation intensity.

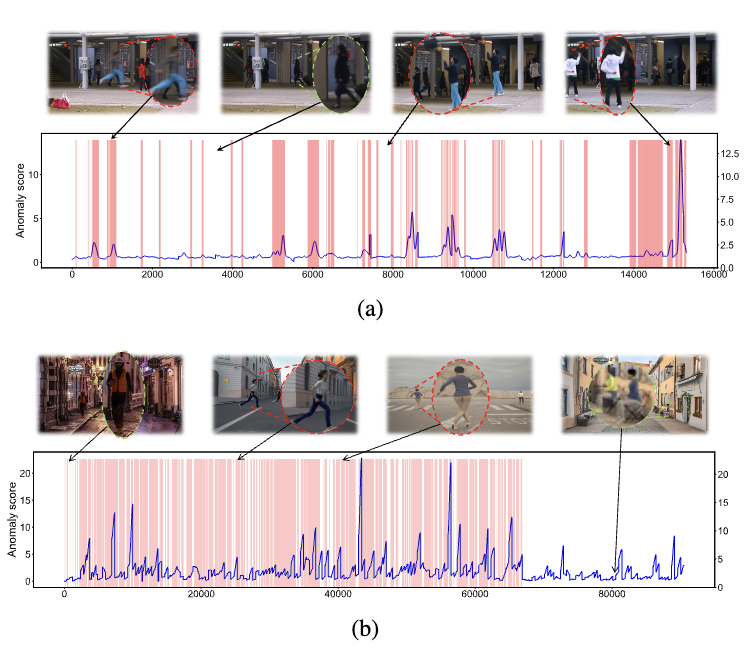

Figure 5. Anomaly score curves on Avenue and HR-UBnormal datasets. Red circles: abnormal events; Green circles: normal events.

Left: ground truth labels. Right: detection results.

@article{tan2024fgdiff,
  title={FG-Diff: Frequency-Guided Diffusion Model with Perturbation Training for Skeleton-Based Video Anomaly Detection},
  author={Tan, Xiaofeng and Wang, Hongsong and Geng, Xin and Wang, Liang},
  journal={arXiv preprint arXiv:2412.03044},
  year={2024}
}