📝 Abstract

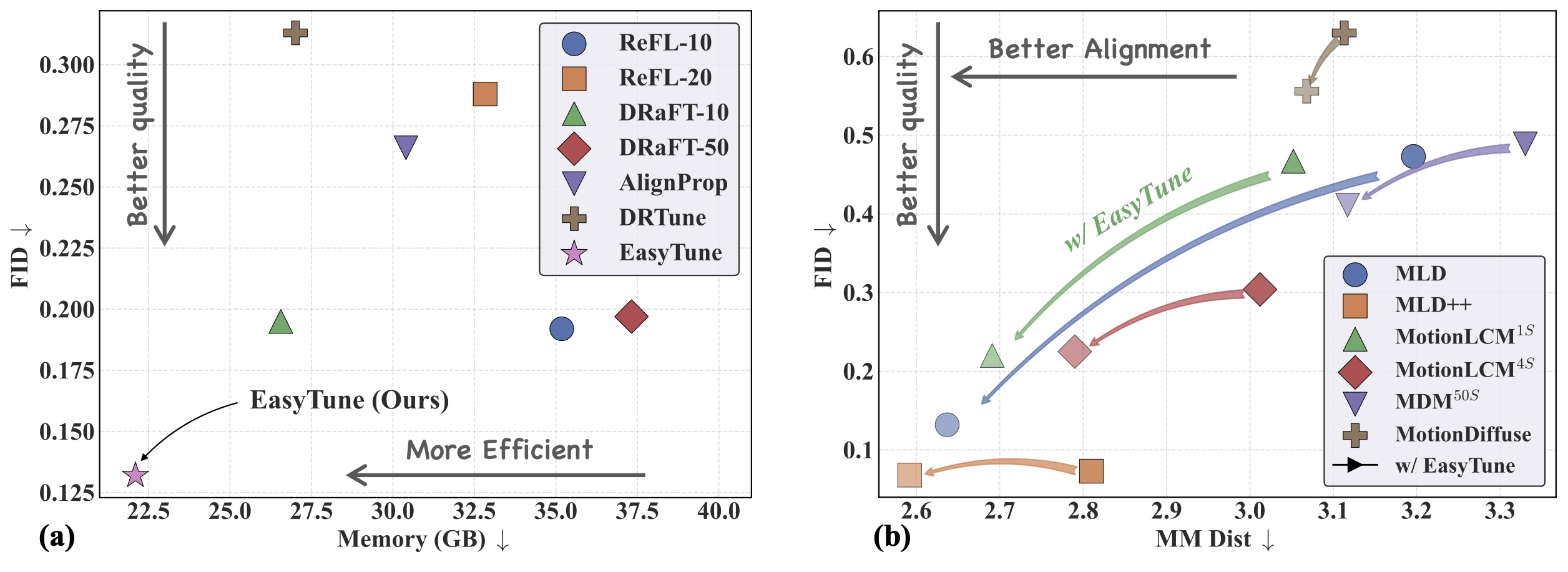

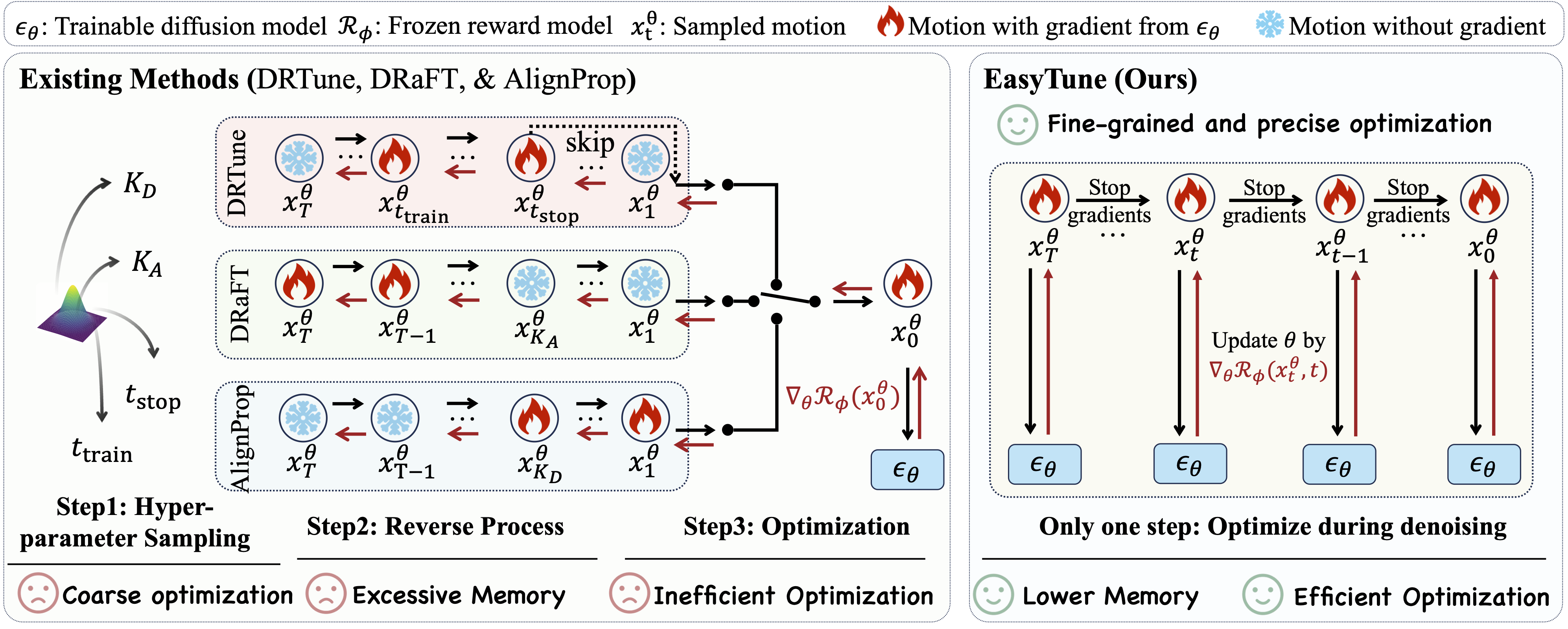

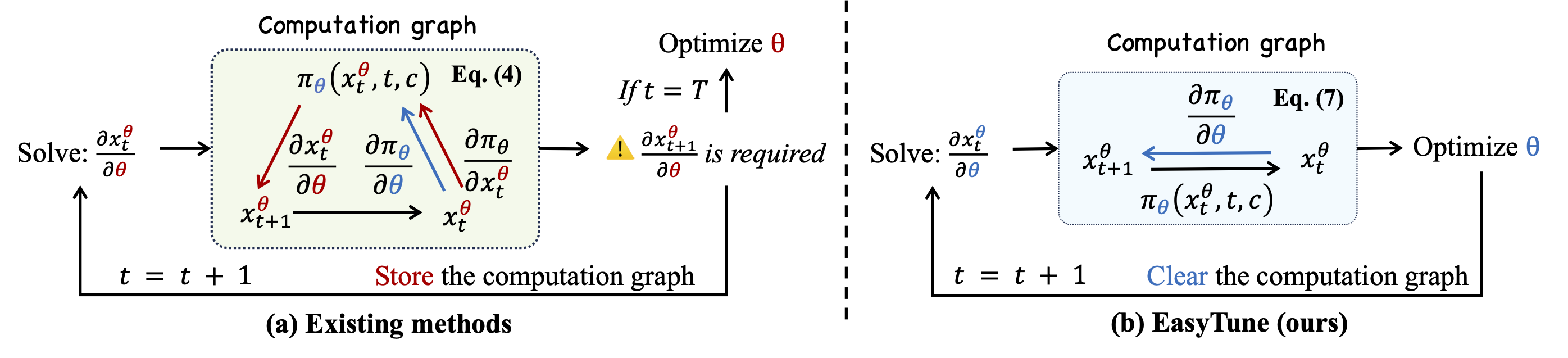

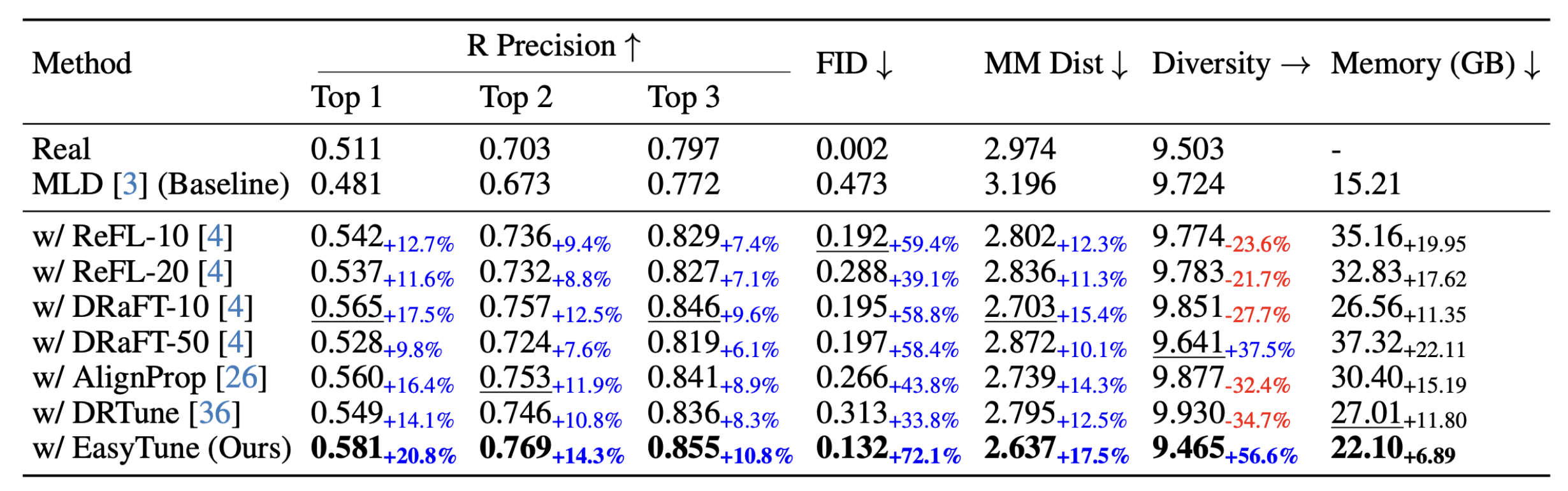

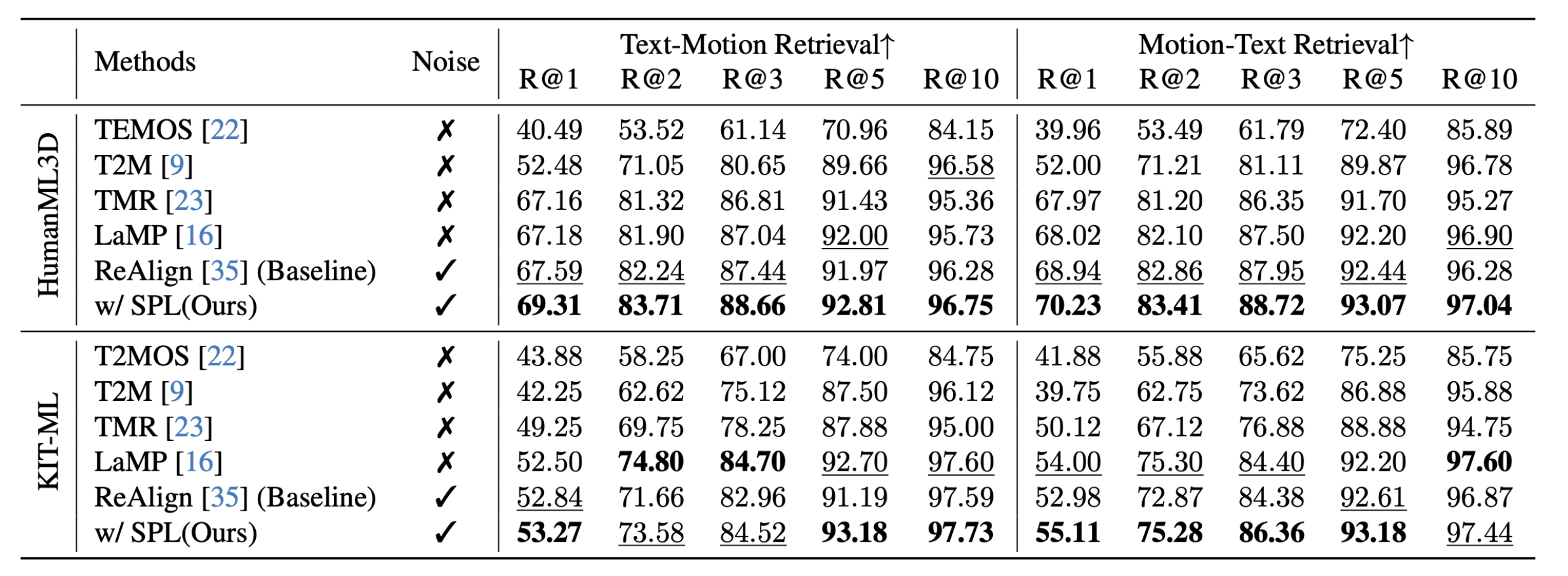

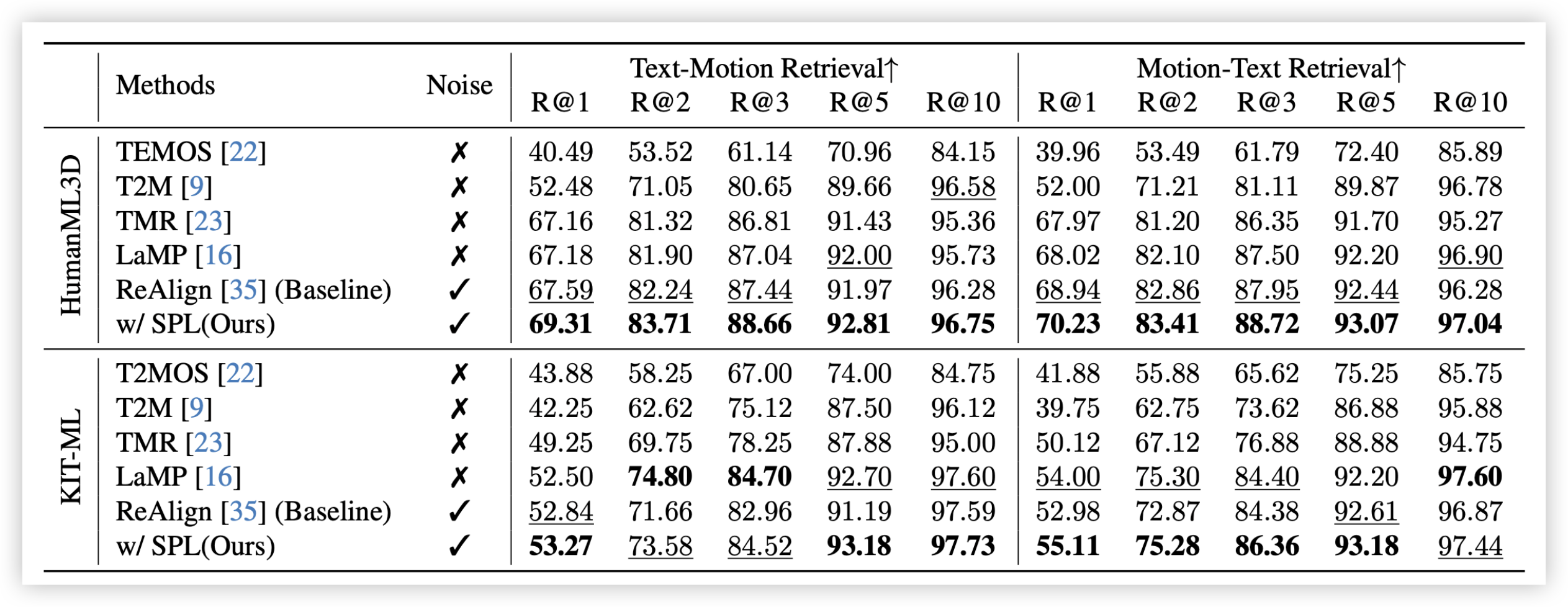

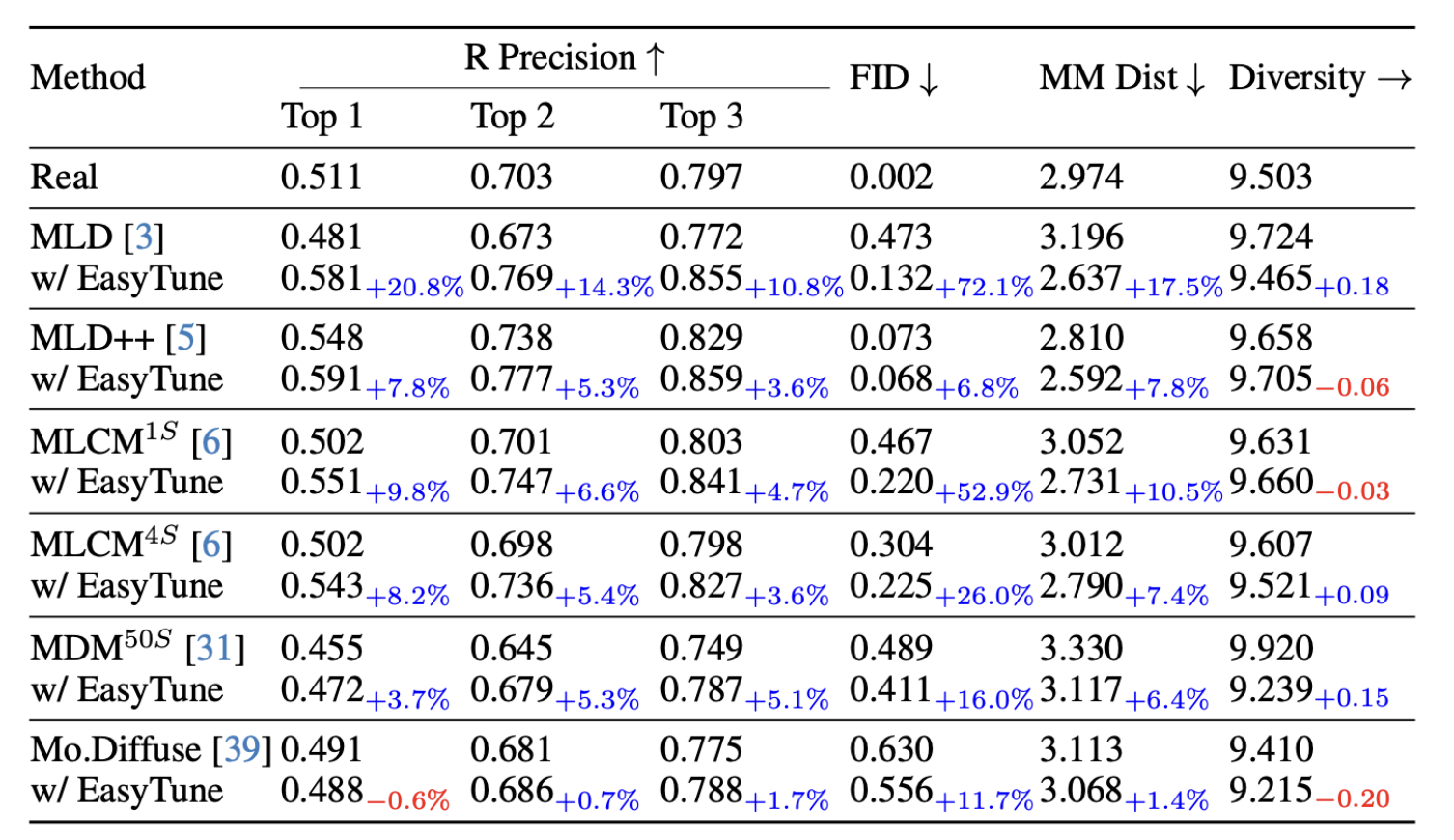

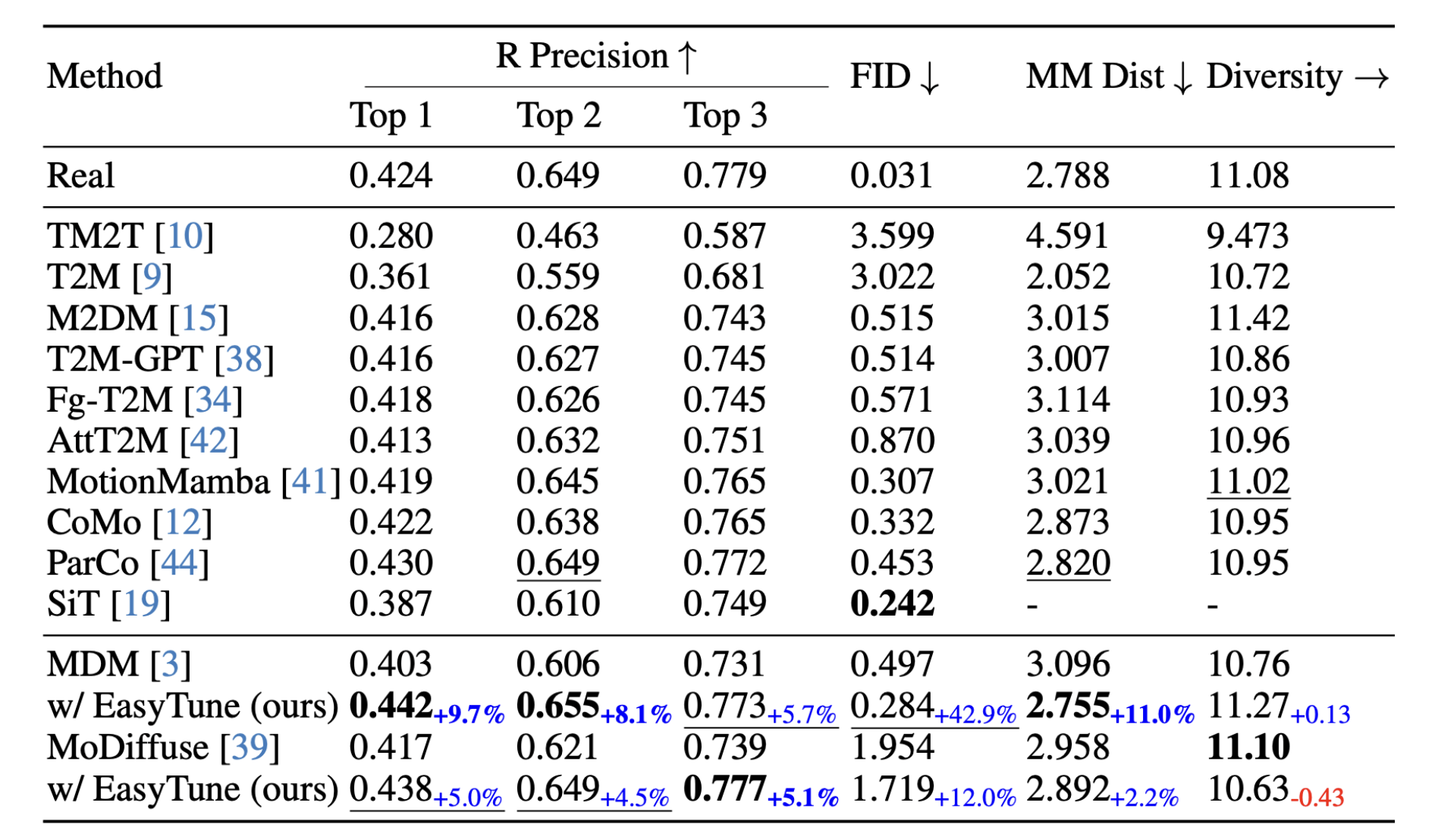

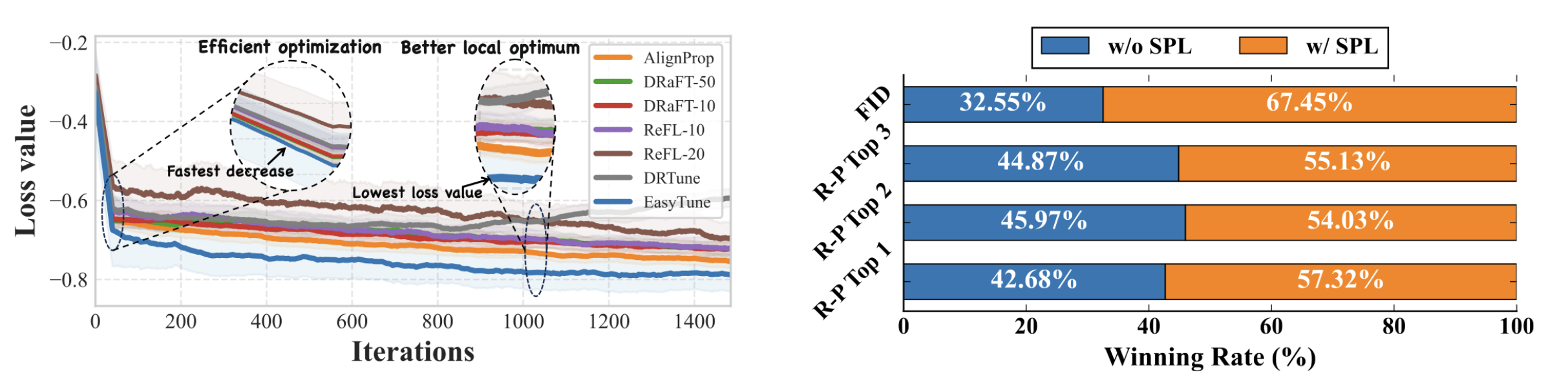

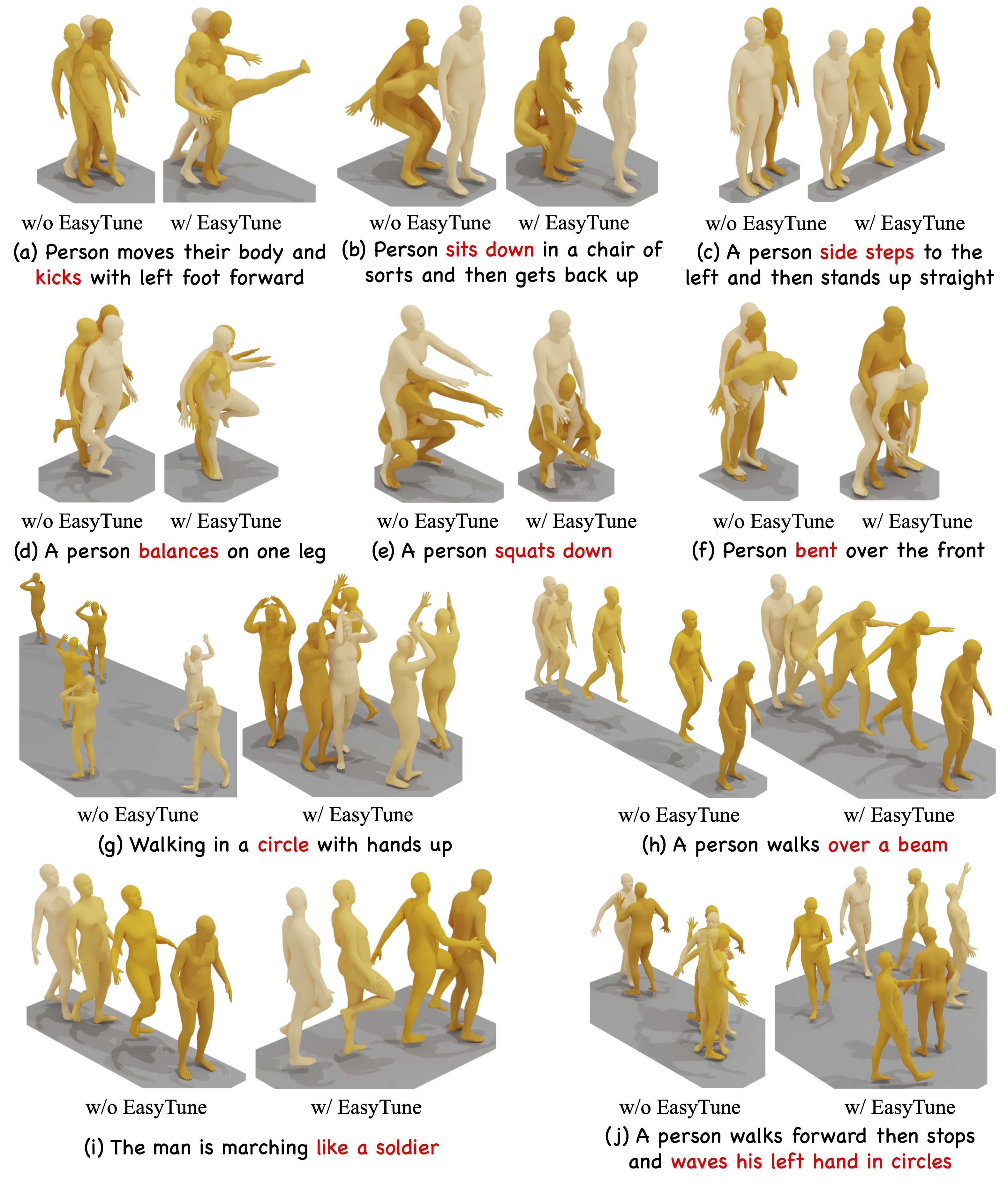

Motion generative models have advanced significantly in recent years, yet aligning them with downstream objectives remains challenging. Recent studies show that directly aligning diffusion models with preferences through differentiable rewards yields promising results. However, these methods suffer from inefficient, coarse-grained optimization and high memory consumption. In this work, we first theoretically identify the fundamental cause of these limitations: the recursive dependence between steps of the denoising trajectory. Motivated by this insight, we propose EasyTune, which fine-tunes the diffusion model at each denoising step rather than over the entire trajectory. This decouples the recursive dependence and enables optimization that is (1) dense and fine-grained and (2) memory-efficient. Furthermore, the scarcity of preference motion pairs limits the training of motion reward models. To address this, we introduce a Self-refinement Preference Learning (SPL) mechanism that dynamically identifies preference pairs and conducts preference learning. Experiments show that, compared with DRaFT-50, EasyTune achieves an 8.91% improvement in the alignment metric (MM-Dist) while incurring only 31.16% of its additional memory overhead.
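To make the step-wise idea concrete, the following is a minimal scalar sketch and not the EasyTune implementation. It assumes a one-parameter toy denoiser and a quadratic reward applied to each intermediate sample; the names `denoise_step`, `reward`, `sample`, and `finetune_stepwise` are hypothetical. The only point it illustrates is that each update differentiates through a single denoising step, with the incoming sample treated as a constant, so no gradient has to be carried back through earlier steps.

```python
def denoise_step(x, theta, rate=0.3):
    """Toy denoiser (assumption): pull the sample part of the way toward theta."""
    return x + rate * (theta - x)


def reward(x, goal=1.0):
    """Toy differentiable reward (assumption): highest when x equals goal."""
    return -(x - goal) ** 2


def sample(theta, x_T=2.0, num_steps=10):
    """Run the full toy denoising trajectory from x_T and return the final sample."""
    x = x_T
    for _ in range(num_steps):
        x = denoise_step(x, theta)
    return x


def finetune_stepwise(theta, x_T=2.0, num_steps=10, epochs=200,
                      lr=0.1, rate=0.3, goal=1.0):
    """Update theta once per denoising step instead of once per trajectory."""
    for _ in range(epochs):
        x = x_T
        for _ in range(num_steps):
            x_in = x  # treated as a constant: no gradient reaches earlier steps
            x_out = denoise_step(x_in, theta, rate)
            # Chain rule through this single step only:
            # d reward / d x_out = -2 (x_out - goal), d x_out / d theta = rate
            grad = -2.0 * (x_out - goal) * rate
            theta += lr * grad  # gradient ascent on the reward
            x = x_out
    return theta


if __name__ == "__main__":
    theta_before = 0.0
    theta_after = finetune_stepwise(theta_before)
    print("reward before:", reward(sample(theta_before)))
    print("reward after: ", reward(sample(theta_after)))
```

In this toy, a trajectory-level method would instead differentiate the final reward through all `num_steps` applications of `denoise_step`, which is the recursive dependence the abstract refers to. The step-wise loop yields one update per denoising step and only ever needs the current step's computation.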